Tacit knowledge and a multi-method approach in Asset Management

Giovanni Moura de Holanda[1] FITec – Technological Innovations

Jorge Moreira de Souza[2]

Cristina Y. K. Obata Adorni[3] FITec – Technological Innovations

Marcos Vanine P. de Nader[4] FITec – Technological Innovations

______________________________

Abstract

This paper has two main objectives. The first is to reflect on the validity of data in the analyses and projections that underpin the engineering asset management of organizations, considering, on the one hand, a certain resistance to, or even inadequate use of, data and information of a subjective nature and, on the other hand, a consolidated reliance on quantitative approaches and decisions based on data series. The second objective is to contextualize the applicability of combining qualitative data, based on experts’ experience and on the tacit knowledge built in organizations, with approaches based essentially on quantitative data, according to data availability and the decision scenario over the asset life cycle. We present two applications of an approach combining qualitative and quantitative data. One example interrelates Statistics with Psychology, and the other combines elicited data about asset indicators with deterministically calculated parameters. Both applications aim to provide more realistic indicators and to support more assertive decisions in the Asset Management process.

Keywords: Tacit knowledge. Qualitative and quantitative data. Elicitation. Asset management. Decision making.

CONHECIMENTO TÁCITO E UMA ABORDAGEM MULTICRITÉRIO EM GESTÃO DE ATIVOS

Resumo

Este artigo tem dois objetivos. O primeiro é o de refletir sobre a validade dos dados em análises e projeções que apoiem decisões relativas à gestão de ativos físicos, considerando, por um lado, uma certa resistência ou mesmo um uso inadequado de dados e informações de natureza subjetiva e, por outro lado, uma confiança consolidada em dados e abordagens quantitativas. O segundo objetivo é o de contextualizar a aplicabilidade de combinar dados qualitativos baseados na experiência de especialistas, e no conhecimento tácito existente nas organizações, com abordagens baseadas essencialmente em dados quantitativos, conforme a disponibilidade dos registros e os cenários de decisão ao longo do ciclo de vida do ativo. Nós apresentamos também duas aplicações de uma abordagem que combina dados quanti e qualitativos. Um desses exemplos inter-relaciona Estatística e Psicologia, e o outro reúne dados elicitados sobre indicadores dos ativos com parâmetros calculados de forma determinística. Ambas as aplicações objetivam prover indicadores mais realísticos e com isso possibilitar decisões mais assertivas no processo de gestão de ativos.

Palavras-chave: Conhecimento tácito. Dados qualitativos e quantitativos. Elicitação. Gestão de ativos. Tomada de decisão.

1 Introduction

Asset Management (AM) is now part of the technical and managerial corpus of organizations in virtually every sector of the economy. Metrics, indicators, analytical methods, and data collection – a flood of data – make up the framework that feeds initiatives and systems aimed at supporting decisions about the assets of a company (KAUFMANN, 2019; HOMER, 2018).

The multiple agents of the productive society can benefit from this analytical support in terms of how to manage physical and intangible assets that enable organizations to generate and deliver value to their customers. Such a need is decisive regarding financial performance and gains more pressing dimensions in the case of organizations with intensive capital, which require significant investments to purchase or replace equipment and whose operational performance is regulated according to the impacts resulting from the interruption of their services. This is the case, for example, of electricity utilities (HOLANDA et al., 2019; NIETO et al., 2017), and utilities in general (CARDOSO et al., 2012).

In recent decades there has been a technical and methodological maturation, accompanied by an increase in value perception, that has made it possible to place AM on the agenda of organizational actions worldwide. Large-scale sensing, the capacity to store and process large volumes of data, ubiquitous digitization, Artificial Intelligence mechanisms, intelligent networks, international standards for management systems, requirements for sustainable development and, above all, an increasing credibility of decisions based on data and analytical synthesis have outlined a new organizational habitus.

Despite this technological advancement, a question gains sharper contours: how to gather the necessary data, and what to do with these data to systematically support decisions throughout the asset life cycle – for issues related to the life cycle, see, for example, (HAUSCHILD et al., 2018). Obviously, this issue of selecting, preparing and using data involves a methodological aspect that is inherent to the AM process as a whole. Even with the huge volume of data available today, organizations face the basic need to select and cleanse the data, as well as to fill gaps and resolve inconsistencies, so that the data can be applied to the methodology implemented in analysis and decision support systems. Data quality is fundamental for this purpose – cf. (CICHY; RASS, 2019).

Historical asset databases quite often lack data that are essential to AM, especially data needed to look to the future and to support decisions and long-term planning. Synthetic data may address part of this need, through techniques such as machine learning and data elicitation, the latter based on the practical impressions of experts. However, the elicitation process rests on a cultural aspect that deserves reflection and that is problematized in times of big data: the validity of data of a subjective nature amid a growing and consolidated confidence in the quantitative scientific treatments adopted in data-driven analyses. By way of illustration, a survey conducted with decision makers in the Oil & Gas industry shows that almost half of the respondents never or rarely recommend qualitative analysis of investment options for important decisions and that more than half recommend probabilistic analysis (BIKEL; BRATVOLD, 2008).

Such a condition goes back to the recurrent epistemological dualism between scientific knowledge and tacit knowledge, between the validity of data obtained from qualitative approaches and those obtained with quantitative treatment, or between subject and object in the construction of knowledge. This may lead to uncertainty about which analytical instruments to rely on when making decisions, mainly for planning and major investments.

An exacerbation of this epistemological cleavage can lead organizations to neglect elicited tacit knowledge and to avoid approaches that try to overcome the limitations found in classic quantitatively oriented models. Moreover, what can be said about the use of elicitation in the face of digital AM and of digital twins able to simulate the operational behavior of an engineering asset in varied ways and in real time? Is it possible to combine data-driven approaches with analyses that consider uncertainties, risks and lack of data?

It is precisely on these crucial aspects of methodologies aimed at asset life cycle management that we offer some considerations here, highlighting some concepts, views on the ideal of objectivity, and methodological perspectives that combine methods and approaches to provide analytical alternatives for managing assets.

One goal of this paper is therefore to reflect on the validity of data for supporting decisions in engineering asset management, considering both a certain resistance to, or even inadequate use of, subjective information and a consolidated reliance on quantitative approaches. Another objective is to address the applicability of combining the experts’ experience and the tacit knowledge built in organizations with approaches based essentially on quantitative data. Moreover, such a combination can absorb the data already existing in the organization and extend them over the asset life cycle, with all the events and multidimensional impacts to which the assets are subjected.

The paper is structured as follows. Section 2 presents some notes on the episteme underlying the construction of tacit knowledge, in order to substantiate the reflections and points of view raised in the subsequent argumentation. In Section 3 we highlight some works from the literature that share a similar view and introduce the foundations of the multi-method approach we have been adopting to support decisions in AM.

Section 3 also includes two examples of approaches combining qualitative and quantitative data, seeking to illustrate the application of the multi-method approach. The first example interrelates Statistics with Psychology, applying variance/covariance analysis and a multivariate technique to survey responses (opinions) expressed through the scorecard method. The second example combines indicators expressed through experts’ subjectivity with deterministically calculated parameters. More specifically, an asset indicator such as the MTBF (Mean Time Between Failures) is estimated by elicitation, while the standard deviation is quantified from qualitative scores, in order to quantitatively derive a Reliability indicator. Both approaches make it possible to build scenarios and perform sensitivity analysis to support decisions in AM.

Finally, Section 4 summarizes and brings the paper to a closure.

2 Some notes on tacit and informal knowledge

For Polanyi, the knowledge underlying explicit knowledge is more primary and fundamental, given that all knowledge is tacit or based on it (1959). In accordance with this conception, tacit knowledge is formed by a technical component, based on personal skills and practice, and a cognitive component expressed, for instance, in tips, intuitions, and beliefs. In a categorization of three possible sorts of knowledge, Lars-Göran Johansson (2016) classifies practical or tacit knowledge as that which cannot be transmitted by language alone, which requires both demonstration and practice, and which forms the basis for much of professional knowledge.

Tacit knowledge and explicit knowledge are complementary and the tacit presents itself as an alternative to the “ideal of objectivity” and becomes explicit when articulated by language. Many authors committed to a new epistemology have produced a multitude of studies on issues that include or depart from this cleavage.

Edgar Morin understands that four anchors of science provide the progress of knowledge: empiricism, rationality, verification, and imagination (2004). Further, following the basic principles of complex thinking, the construction of knowledge includes uncertainty, error, subjectivity and contradiction, among other conceptions. Such a construction takes place on dialogical bases, including therefore voices that report multiple perspectives and experiences of those who experience the issue.

Complementing the data (without any mediation) with collective thinking, contrasting “cogito” with “cogitamus”, as Latour proposes (2010), is a way to overcome the lack of data and to include the multiple perspectives represented by the thinking of the various actors involved in the AM process. It is not a matter of opposing rhetoric and science, or of comparing weak eloquence (epideixis) with strong eloquence (apodeixis), but of uniting them in favor of a whole that is greater than the sum of its parts, making them work together especially when the most desirable knowledge (that which can be measured and verified formally) is not complete. It can also be a way of avoiding the polarization of a world divided in half, which excludes non-articulated knowledge from the corpus of science, even if this division is made in search of a new scientific spirit, as Bachelard (1978) treats it in his epistemology.

From Morin's perspective (2004), it is necessary to maintain the two poles of knowledge construction – the subjective and the objective – to seek an understanding of how the complex relationships between them are established. Historically, the subjective side has been subject to epistemological questioning and has not been considered by many as an object of science; nevertheless, it can gain meaning and consistency if treated methodically. This aspect can be considered, for example, when using data elicitation. Moreover, Morin reminds us of the importance of understanding that incompleteness and the existence of blind spots are conditions of knowledge.

With the increasing use of qualitative research by scientific communities, epistemological and methodological issues related to this approach have proliferated (WHITTEMORE et al., 2001). Guba and Lincoln have addressed this issue from various perspectives, discussing criteria of merit and validity for the naturalistic paradigm (associated with qualitative research, according to the terminology they use) – see, for example, (GUBA; LINCOLN, 1982).

The tension between the validity of the results obtained by qualitative and quantitative approaches has therefore been stressed for a long time. Indeed, formal and informal knowledge, explicit or tacit, (in various formats, lessons learned and contextualized experience) can be combined, composing organizational knowledge (O’LEARY, 1998) on which AM operates. Dynamically, this knowledge can and should be continuously evaluated when subjected to use, considering systematic methods that can ensure validity and bring confidence to managers.

3 Joining methods

An option to bridge the aforementioned dichotomy and add the best that each side has to offer is to combine methods and techniques in an integrated and interrelated manner, like an “ecology of methods” or a multi-method approach. By narrowing the distance between subject and object, a multiple-perspective, multidimensional approach makes it possible to contextualize humans, technologies, and nature in a single field of study or, why not say, in the same habitat. Ecology, technology and data science share dynamic properties: they are essentially complex and work on the basis of information circularity. In this sense, we adopt herein the “ecology” metaphor to illustrate a holistic effort to interrelate theories, methods and techniques aimed at gathering, transforming, and analyzing complex data and information.

The literature presents numerous possible alternatives for tracing this methodical line of integrating approaches – for example (ALVES; HOLANDA, 2016), concerning general applications; (ROGERS, 1995), amalgamating human behavior, probability, marketing and innovations; and (GALAR et al., 2014), applied to asset management. In relation to human behavior, different kinds of data and analytical approaches tend to merge naturally when it comes to decision-making. People establish beliefs about the odds: they assign values (or utilities) to outcomes and combine these beliefs and values, forming preferences about the risk options involved in a decision. Beliefs can be systematically wrong in a number of ways, and such errors are called biases – cf. (OGUSHI et al., 2004).

Tversky and Kahneman examined the effects of these behavioral factors in depth, considering that in situations of uncertainty people make decisions based more on heuristics and cognitive biases than on probability estimations and theoretical models, in a manner that usually does not lead to the best choices – cf. (TVERSKY; KAHNEMAN, 1974; KAHNEMAN, 2011). Taking such ideas into account, Watson and Floridi (2020), in their approach to iML (interpretable Machine Learning), propose a system in which software supports an individual in facing such bounded rationality.

Another example of an integrated approach is presented by (OPPERHEIMER et al., 2016) in terms of quantifying uncertainties in models used for climate change projections. The solution they adopted was probabilistic inversion, eliciting probability distributions from experts in order to adjust the model.

In a study that considers the advances of Analytics due to the strengthening of Big Data, Jansen et al. (2020) investigate how data-driven personas can be leveraged as analytical tools to understand users, compared to the traditional process of persona creation based on questionnaires, interviews and focus groups. The authors conclude that the combination of personas and analytics brings together the strengths of each approach, making it possible to compensate for the deficiencies of each one used separately. Also considering big data approaches, Campos et al. (2017) suggest the use of a modified Balanced Scorecard, focusing attention on both quantitative and qualitative measures.

From the point of view of elicitation techniques properly speaking, there are some studies indicating how to select appropriate techniques for certain organizational contexts, runtime and available resources – see, for example, (HICKEY; DAVIS, 2003) and (TER BERG et al., 2019). These latter authors present an approach that combines the opinions of experts to generate a distribution that allows probabilistically estimating future conditions related to the deterioration of structural assets.

Methods for predicting the performance of engineering assets over their life cycle in situations where historical data is limited may include elicitation. In order to ensure greater precision of the elicited data, probabilistic models can be aggregated, for instance, Bayesian hierarchical modeling and Markov Chain-based algorithms (HESSAMI, 2015), translating into more rigorous approaches to estimate the behavior of assets from the knowledge of experts. This type of combination of methods also makes it possible to structure the semi-structured data obtained by elicitation. Without trying to be exhaustive, but for the sake of illustration, other approaches make different combinations, e.g., methods for adjustment of biases considering the merge of empirical evidence and opinion in a Bayesian analysis (RHODES et al., 2020), and Markov decision processes with elicitation (ALIZADEH, 2016).

Another possibility of quali-quanti integration, combining Statistics with Psychology, is to apply standard variance/covariance analysis to the answers to survey questionnaires that use Likert scales or scorecard methods, with verbal descriptions placed at the extremes to differentiate levels of perception intensity. Another method that can join this integration is Cronbach’s Alpha, which estimates the reliability or internal consistency of a questionnaire – cf. (TABER, 2018).
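As a minimal sketch of this kind of analysis, the snippet below computes the item covariance matrix and Cronbach’s Alpha for a small matrix of Likert answers. The answers are hypothetical and serve only as an illustration; the function name `cronbach_alpha` is ours, and the standard formula based on item variances and the variance of the total score is assumed.

```python
import numpy as np

def cronbach_alpha(scores):
    """Cronbach's alpha for a (respondents x items) matrix of Likert scores."""
    scores = np.asarray(scores, dtype=float)
    k = scores.shape[1]                          # number of questionnaire items
    item_var = scores.var(axis=0, ddof=1)        # sample variance of each item
    total_var = scores.sum(axis=1).var(ddof=1)   # variance of each respondent's total
    return k / (k - 1) * (1.0 - item_var.sum() / total_var)

# Hypothetical answers: 5 respondents, 3 items, scale 1 (very low) to 5 (very high)
answers = np.array([
    [1, 2, 1],
    [2, 2, 3],
    [3, 3, 3],
    [4, 5, 4],
    [5, 4, 5],
])

cov = np.cov(answers, rowvar=False)   # item-by-item variance/covariance matrix
alpha = cronbach_alpha(answers)
print(cov)
print(round(alpha, 3))
```

Values of alpha close to 1 indicate that the items vary together, which is usually read as evidence that the questionnaire measures a single underlying perception.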

After this short argumentation on the multi-method approach, highlighting some references from the literature, two examples combining elicitation and quantitative data in AM are presented next. These case studies are part of our experience and can help to illustrate the central reflection proposed in this paper.

3.1 Quali-Quanti integration

Quantitative methods are well suited to maintenance planning as long as reliability and cost data are recorded and easily available throughout the asset life. Even in this case, the assets are spread over many sites and subjected to different operational conditions, which renders the usual population analysis for predicting reliability and cost trends quite imprecise. For maintenance management this is not a real problem, as the operational staff is mainly responsible for monitoring the performance of its own site. In this scenario, asset management is generally based on the group expertise of the operational staff of the various sites, who score their opinions to provide a general view of performance across all sites. It covers the analysis of sensitive performance attributes in order to decide on the planning measures and the execution time.

Risk analysis methods support asset management decisions. They are based on a set of asset attributes that represent the risk to the business, for example: time to failure of an asset, aging, repair or replacement, time to repair, and repair cost, and they try to quantify the trade-off between risk and cost. The scorecard method produces a composite index that relies on the weights given by the experts, which represent their perception of the criticality of each attribute. The final score quantifies the criticality of each asset.
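The composite index can be illustrated with a short sketch. The weights and attribute scores below are hypothetical, and a normalized weighted sum is assumed as the aggregation rule; other aggregation rules are possible.

```python
import numpy as np

def scorecard_index(scores, weights):
    """Composite criticality index: weighted average of the attribute scores."""
    scores = np.asarray(scores, dtype=float)
    weights = np.asarray(weights, dtype=float)
    return scores @ weights / weights.sum()

# Hypothetical expert weights for four attributes (not taken from the paper)
weights = np.array([0.2, 0.2, 0.2, 0.4])

# Hypothetical scores (1 = very low ... 5 = very high), one row per asset
assets = np.array([
    [5, 4, 4, 5],
    [1, 2, 1, 1],
    [3, 3, 4, 2],
])

index = scorecard_index(assets, weights)
ranking = np.argsort(-index)   # most critical asset first
print(index, ranking)
```

Because the weights express the experts’ perception, changing them changes the ranking, which is why the choice of weights deserves scrutiny.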

The scorecard method, since it aggregates several attributes, is criticized because it can lead to a loss of information and to misinterpretation (GALAR et al., 2014). Another issue is the choice of the correct weights, which is a subjective (qualitative) step. The proposal in this paper is to develop a joint method that correlates quantitative results with the subjective weights and the final score. This method should consider all the attributes and derive quantitative weights, and from them a quantitative score, allowing a full quali-quanti analysis.

Principal Components Analysis (PCA) is a multivariate technique that can provide objective (quantitative) weights through the evaluation of the eigenvalues of the many attributes. PCA is generally employed to reduce the number of attributes by detecting those that are correlated and can be deleted with small loss of information – see (DUNTEMAN, 1989) for details. The weight of the importance of each attribute is quantified by the eigenvalues. This technique is suggested in (GALAR et al., 2014) as a possible aggregation method.
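A minimal sketch of how eigenvalue ratios can be obtained is shown below. The score matrix is hypothetical, and the use of the correlation matrix (rather than the covariance matrix) is our assumption, since the text does not specify which one is decomposed.

```python
import numpy as np

def pca_eigenvalue_ratios(scores):
    """Share of total variance carried by each principal component."""
    scores = np.asarray(scores, dtype=float)
    corr = np.corrcoef(scores, rowvar=False)      # attribute correlation matrix
    eigenvalues = np.linalg.eigvalsh(corr)[::-1]  # eigvalsh is ascending; reverse it
    return eigenvalues / eigenvalues.sum()

# Hypothetical scores (1 to 5) of three attributes for eight items
scores = np.array([
    [1, 1, 2],
    [2, 2, 2],
    [3, 2, 3],
    [4, 4, 3],
    [5, 4, 5],
    [2, 3, 1],
    [4, 5, 4],
    [5, 5, 4],
])

ratios = pca_eigenvalue_ratios(scores)
print(np.round(ratios, 3))
```

A first ratio much larger than the others suggests that the attributes are strongly correlated and that a single dimension explains most of the variation in the scores.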

As practical examples illustrating the quali-quanti combination, subsection 3.2 depicts how PCA can be used in harmony with the scorecard to quantitatively detect pitfalls that need additional investigation. The second example, addressed in subsection 3.3, uses the same attributes as the first example to propose a quantitative method for deriving the failure distribution of an asset from the experts’ scores. By means of the two examples, it is possible to highlight the feedback that this joint approach may provide.

3.2 Case 1: Quali-quanti analysis of an elicitation for AM

This is a real example of elicitation (quali analysis) in which 209 items of a large production site were scored in order to weight their failure probability. Four attributes, typical of reliability and risk analyses related to asset replacement, are considered:

• Time to failure: 1 to 5 as time to failure decreases,

• Degradation: 1 to 5 as the item presents a higher degradation,

• Replacement: 1 to 5 as the replacement is more urgent,

• Expert feeling: 1 to 5 as the risk increases.

In the elicitation, the numerical classification of the attributes observes the following correspondence: 1 (very low), 2 (low), 3 (medium), 4 (high), 5 (very high).

The composite index “failure probability” weights the attributes considering the “expert feeling” as the most important (largest weight). The question in this example is the analysis of the coherence (quanti) between the “expert feeling” and the other attributes. PCA is applied to the first three attributes, and the eigenvalue ratios are 72.3%, 16.2% and 11.5%, respectively. This suggests that the scale items can be considered unidimensional and highly impacted by the “time to failure”.

The next step is to derive the quantitative “expert feeling” using the PCA eigenvalue ratios. Based on a few runs of this approach, we set an initial threshold to characterize situations that require more detailed analysis: if the “expert feeling” score is not within ±20% of the PCA score, the item is investigated. Applying this approach to the sample of 209 items, 31 need investigation. Table 1 shows a small sample of items that should be investigated in more detail, corresponding to the elicitation process of a diesel engine.

Table 1 - Specification of the diesel engine: elicitation and corresponding scores

Source: elaborated by the authors.

As can be noted in Table 1, the quantitative analysis suggests that those five items should be revisited, given an incoherence in their qualitative scores. This example shows how quantitative treatment can modulate the analysis of a qualitative survey, pointing out outliers or data that deserve more detailed investigation.
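The screening rule of this case can be sketched as follows. We assume, as one possible reading of the text, that the PCA score of an item is the weighted average of its first three attribute scores, using the reported eigenvalue ratios as weights in the order in which the attributes are listed. The item scores are hypothetical and are not the data of Table 1.

```python
import numpy as np

# Eigenvalue ratios reported in the text, used here as attribute weights (assumption)
PCA_WEIGHTS = np.array([0.723, 0.162, 0.115])
TOLERANCE = 0.20   # +/-20% band around the PCA score

def needs_investigation(attribute_scores, expert_feeling,
                        weights=PCA_WEIGHTS, tol=TOLERANCE):
    """Return the PCA score of each item and a flag for items outside the band."""
    attribute_scores = np.asarray(attribute_scores, dtype=float)
    expert_feeling = np.asarray(expert_feeling, dtype=float)
    pca_score = attribute_scores @ weights / weights.sum()
    deviation = np.abs(expert_feeling - pca_score) / pca_score
    return pca_score, deviation > tol

# Hypothetical items: time to failure, degradation, replacement (1 to 5)
items = np.array([
    [5, 4, 4],
    [2, 2, 3],
    [4, 3, 3],
    [1, 1, 2],
])
feeling = np.array([5, 4, 4, 1])   # hypothetical "expert feeling" scores

pca_score, flagged = needs_investigation(items, feeling)
print(np.round(pca_score, 3), flagged)
```

In this illustration only the second item is flagged: its “expert feeling” of 4 is far above what its attribute scores suggest, so it would be sent back to the experts for review.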

3.3 Case 2: An example using Failure Time Distribution

Failure time distribution is used to estimate the reliability of an item and the time of replacement assuming a given reliability risk. The proposed method in this example shows how to quantitatively derive the reliability based on the qualitative attribute scores. It is based on the first two attributes of the previous example, namely: Time to failure, Degradation. The reliability evaluation allows defining the time to replacement for a given risk.

The proposed method to derive the distribution function needs two parameters: the MTBF (Mean Time Between Failures) and the standard deviation. The MTBF is estimated by the elicitation process, while the standard deviation is quantified from the qualitative scores. For mechanical equipment, the standard deviation is lower than the mean and diminishes with the degradation time. Table 2 presents the qualitative score values and the proposed standard deviation as a percentage of the MTBF.

Table 2 - Qualitative scores for MTBF

Source: elaborated by the authors.

Since the distribution function is not known a priori, a weighted model combining constant and non-constant failure rates is proposed. The distribution function Fam (t) is derived by weighting two functions: the Exponential distribution, Exp (no deterioration), and the Normal distribution function, N (μ, σ) (a Gamma function could be used as well), as expressed in (1).

(1)

where p is the weight parameter

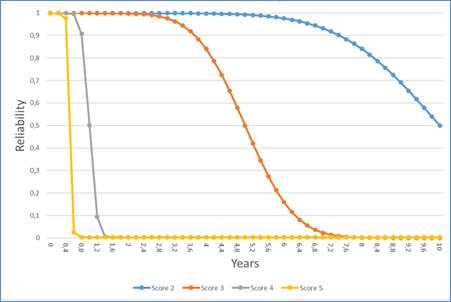

The weight parameter is the confidence level of the Normal function, considering the mean and three times the standard deviation, p = 99.5%. In other words, 99.5% of the failures will occur in the interval defined by the mean plus or minus three times the standard deviation. The reliability Ram (t) = 1 – Fam (t) expresses the asset survivability as a function of time and is presented in Fig. 1 for each score level. Considering asset survivability in the range [10%, 90%], the time to replace is: score 2, more than 8 years; score 3, 4 to 6 years; score 4, 1 to 1.5 years; and score 5, less than 1 year. Considering the estimates of Table 2, the qualitative estimation of the time to replace presents a high risk of failure, mainly for scores 4 and 5, when compared with the quantitative ranges presented above.

Figure 1 - Reliability plots for the scores. Source: elaborated by the authors.

This second example also highlights the analytical gains that can be achieved by combining quali-quanti approaches: the elicitation about replacement time in the last two rows of Table 2 should be reconsidered. In this specific case, the interrelation between qualitative and quantitative methods occurs in more than one stage, showing how they can be synergistically intertwined.
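The weighted model of this case can be sketched in a few lines. Several points are our assumptions and not taken from the paper: the weight p is applied to the Normal component and (1 – p) to the Exponential component, that is, Fam (t) = p·FN (t; μ, σ) + (1 – p)·FExp (t); the exponential rate is 1/MTBF; μ equals the MTBF; and the MTBF and standard deviation used below are hypothetical values.

```python
import math

def normal_cdf(t, mu, sigma):
    """Cumulative distribution function of N(mu, sigma)."""
    return 0.5 * (1.0 + math.erf((t - mu) / (sigma * math.sqrt(2.0))))

def exponential_cdf(t, mtbf):
    """CDF of an exponential distribution with mean mtbf (constant failure rate)."""
    return 1.0 - math.exp(-t / mtbf) if t > 0 else 0.0

def reliability(t, mtbf, sigma, p=0.995):
    """R_am(t) = 1 - F_am(t), with F_am an assumed p-weighted mixture."""
    f_am = p * normal_cdf(t, mtbf, sigma) + (1.0 - p) * exponential_cdf(t, mtbf)
    return 1.0 - f_am

def time_at_reliability(target, mtbf, sigma, p=0.995):
    """Time at which the reliability drops to `target`, found by bisection."""
    lo, hi = 0.0, mtbf + 10.0 * sigma
    for _ in range(100):
        mid = 0.5 * (lo + hi)
        if reliability(mid, mtbf, sigma, p) > target:
            lo = mid   # still more reliable than the target: move right
        else:
            hi = mid
    return 0.5 * (lo + hi)

# Hypothetical asset: MTBF of 5 years, standard deviation of 20% of the MTBF
mtbf, sigma = 5.0, 1.0
t90 = time_at_reliability(0.90, mtbf, sigma)   # survivability falls to 90%
t10 = time_at_reliability(0.10, mtbf, sigma)   # survivability falls to 10%
print(round(t90, 2), round(t10, 2))
```

The pair (t90, t10) delimits a replacement window for the [10%, 90%] survivability range, which can then be compared with the replacement time elicited from the experts.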

4 Summary and conclusion

In this paper, we reflect on aspects related to the validity of data in the analyses and projections that underpin the engineering asset management of organizations, considering, on the one hand, a certain resistance to, or even inadequate use of, data and information of a subjective nature and, on the other hand, a consolidated reliance on quantitative approaches and decisions based on data series.

Some epistemological issues were put forward to consider the possibility of properly combining qualitative data based on experts’ experience, and on the tacit knowledge built in organizations, with approaches based essentially on quantitative methods. The quali-quanti investigation may be seen not in terms of the validity of analysis, but as a possibility of obtaining applicable knowledge from something that can be thought of as being both subjective and objective, beyond conventions: the information.

Two examples of approaches combining qualitative and quantitative data were presented to illustrate the analytical potential argued here. Useful qualitative methods, such as a scorecard based on the weighted opinions of experts, can be correlated with quantitative weights obtained from the data using techniques such as Principal Components Analysis. This approach can detect situations in which scores should be revisited because the qualitative and quantitative scores disagree. Such approaches show how useful it can be to link data elicitation with quantitatively calculated parameters and thus estimate key indicators and support decisions.

Possible differences between qualitative assessments – based on the tacit knowledge built by specialists – and quantitative data deserve a specific study to understand their underlying causes. Examples include the extent to which perceptual deviations related to an asset being qualitatively evaluated can be weighted and, conversely, how much the subjective experience can explain deficiencies of quantitative approaches regarding aspects not revealed by the calculated indicators. This is a possible theme for further studies related to transdisciplinary challenges and the combination of methods in asset management.

An aspect that is pointed out in the literature, and that we also see as a barrier to a broader application of combined qualitative and quantitative approaches, is the difficulty of bringing experts together and structuring their tacit knowledge on a regular basis. Systematic procedures for this require experts' time and a team able to apply methodological routines continuously, rather than one-off efforts carried out in an ad hoc manner.

Despite the practical difficulties and the epistemological controversies that were briefly introduced in this paper, the joint alignment of approaches, as in an ecology of methods, can be undertaken as an effort to provide well-grounded information and insights to support decisions over the asset life cycle. It may often be a feasible alternative for making decisions in situations where data are scarce or simply inconsistent.

REFERENCES

ALIZADEH, P. Elicitation and planning in Markov decision processes with unknown rewards. Paris: Université Sorbonne Paris Cité, 2016.

ALVES, A. M.; HOLANDA, G. M. “Liquid” Methodologies: combining approaches and methods in ICT public policy evaluations. Revista Brasileira de Políticas Públicas e Internacionais, v. 1, n. 2, p. 70–90, 2016.

BACHELARD, G. A filosofia do não; O novo espírito científico; A poética do espaço. Seleção de textos de José Américo Motta Pessanha; Tradução de Joaquim José Moura Ramos et al. São Paulo: Abril Cultural, 1978. (Os Pensadores). Inclui vida e obra de Bachelard.

BIKEL, J. E.; BRATVOLD, R. B. From uncertainty quantification to decision making in the Oil and Gas industry. Energy Exploration & Exploitation, v. 26, n. 5, p. 311-325, 2008.

BOURDIEU, P. Les trois états du capital culturel. Actes de la recherche en sciences sociales, v. 30, p. 3-6, 1979.

CAMPOS, J.; SHARMA, P.; JANTUNEN, E.; BAGLEE, D.; FUMAGALLI, L. Business performance measurements in Asset Management with the support of big data technologies. Management Systems in Production Engineering, v. 25, n. 3, p. 143-149, 2017.

CARDOSO, M. A.; SILVA, M. S.; COELHO, S. T.; ALMEIDA, M. C.; COVAS, D. I. C. Urban water infrastructure asset management – a structured approach in four water utilities. Water Science & Technology, v. 66, n. 12, p. 2702-2711, 2012. Doi: 10.2166/wst.2012.509

CICHY, C.; RASS, S. An Overview of Data Quality Frameworks. IEEE Access, v. 7, p. 24634-24648, 2019.

DUNTEMAN, G.H. Principal Components Analysis. SAGE University Paper, 1989.

GALAR, D.; BERGES, L.; SANDBORN, P.; KUMAR, U. The need for aggregated indicators in performance asset management. Eksploatacja i Niezawodnosc: Maintenance and Reliability, v. 16, n.1, p. 120-127, 2014.

GUBA, E. G.; LINCOLN, Y. S. Epistemological and Methodological Bases of Naturalistic Inquiry. Educational Communications and Technology Journal (ECTJ), v. 30, p. 233-252, 1982. Doi: 10.1007/BF02765185

HAUSCHILD, M. Z.; ROSENBAUM, R. K.; OLSEN, S. I. (eds.). Life Cycle Assessment – Theory and Practice. Springer, 2018.

HESSAMI, A. A Risk and Performance-based Infrastructure Asset Management Framework. 2015. Doctoral dissertation (Doctor of Philosophy) – Texas A & M University, Texas, 2015.

HICKEY, A. M.; DAVIS, A. M. Elicitation technique selection: How do experts do it? IEEE INTERNATIONAL REQUIREMENTS ENGINEERING CONF., 11TH., 2003. Proceedings [...]. Monterey Bay, California, USA.

HOLANDA, G. M.; ADORNI, C. Y.; MARSOLA, V. J.; PARREIRAS, F. S.; SARAIVA, T. F.; GODOY NETO, O.; AZEVEDO, C. Aggregating Value in Asset Management: An Approach for Electric Generation and Transmission Utility. BRAZILIAN TECHNOLOGY SYMPOSIUM, 5TH., 2019. Proceedings [...]. Campinas, Brazil. (BTSym19).

HOMER, M. Unlocking the value of asset data. Assets: The Institute of Asset Management magazine, August, p. 10-11, 2018.

JANSEN, B. J.; SALMINEN, J. O.; JUNG, S. G. Data-driven personas for enhanced user understanding: Combining empathy with rationality for better insights to analytics. Data and Information Management, v. 4, n. 1, p. 1-17, 2020.

JOHANSSON, L. G. Philosophy of science for scientists. Springer, 2016. Doi: 10.1007/978-3-319-26551-3

KAHNEMAN, D. Thinking, Fast and Slow. Penguin, 2011.

KAUFMANN, M. Big Data Management Canvas: A Reference Model for Value Creation from Data. Big Data Cogn Comput, v. 3, n. 1, p. 1-19, 2019. Doi: 10.3390/bdcc3010019

LATOUR, B. Cogitamus: seis cartas sobre as humanidades científicas. Translated by Jamille Pinheiro Dias. São Paulo: Ed. 34, 2016. Translation of: Cogitamus: six lettres sur les humanités scientifiques. La Découverte, 2010.

MORIN, E. O Método 3: o conhecimento do conhecimento. Sulina, 2004.

NIETO, D.; AMATTI, J. C.; MOMBELLO, E. Review of Asset Management in distribution systems of electric energy – implications in the National context and Latin America. INTERNATIONAL CONFERENCE ON ELECTRICITY DISTRIBUTION, 24TH., 2017. Proceedings [...]. Glasgow, 12-15 June 2017.

OGUSHI, C. M.; GEROLAMO, G. P.; MENEZES, E.; HOLANDA, G. M. A economia comportamental e a adoção de uma inovação tecnológica no cenário de telecomunicações. Simpósio de Gestão da Inovação Tecnológica. 23., 2004. Proceedings [...]. Curitiba, 2004. p. 2350-2365.

O’LEARY, D. E. Enterprise Knowledge Management. IEEE Computer, v. 31, n. 3, p. 54-61, 1998.

OPPENHEIMER, M.; LITTLE, C. M.; COOKE, R. M. Expert judgement and uncertainty quantification for climate change. Nature Climate Change, v. 6, p. 445-451, 2016. Doi: 10.1038/NCLIMATE2959

POLANYI, M. The study of man. Chicago: The University of Chicago Press, 1959.

RHODES, K. M.; SAVOVIC, J.; ELBERS, R.; JONES, H. E.; HIGGINS, J. P. T.; STERNE, J. A. C.; WELTON, N. J.; TURNER, R. M. Adjusting trial results for biases in meta-analysis: combining data-based evidence on bias with detailed trial assessment. J Royal Statistical Society, v. 183, Part 1, p. 193-209, 2020.

ROGERS, E. M. Diffusion of Innovations. Fourth Edition, The Free Press, 1995.

STENGERS, I.; PRIGOGINE, I. La nouvelle alliance: Métamorphose de la science. Paris: Gallimard, 1979.

TABER, K. S. The use of Cronbach’s Alpha when developing and reporting research instruments in science education. Res. Sci. Educ., v. 48, p. 1273-1296, 2018.

TER BERG, C. J.; LEONTARIS, M.; VAN DEN BOOMEN, M.; SPAAN, M. T. J.; WOLFERT, A. R. M. Expert judgement based maintenance decision support method for structures with a long service-life. Structure and Infrastructure Engineering, v. 15, n. 4, p. 492-503, 2019.

TVERSKY, A.; KAHNEMAN, D. Judgment under Uncertainty: Heuristics and Biases. Science, v. 185, p. 1124-1131, 1974.

WATSON, D. S.; FLORIDI, L. The explanation game: a formal framework for interpretable machine learning. Synthese, v. 198, p. 9211–9242, 2020. Doi: 10.1007/s11229-020-02629-9

WHITTEMORE, R.; CHASE, S. K.; MANDLE, C. L. Validity in Qualitative Research. Qualitative Health Research, v. 11, n. 4, p. 522-537, 2001. Doi: 10.1177/104973201129119299